Natural User Interfaces are great. They aren’t just an input method; they adapt to who is using them and how they’re being used. We could go into the complexities of NUI here, but I’m not going to. Instead, I’m going to give you an alternative to the gesture-based user interface you’re used to with Kinect: speech recognition and voice navigation.

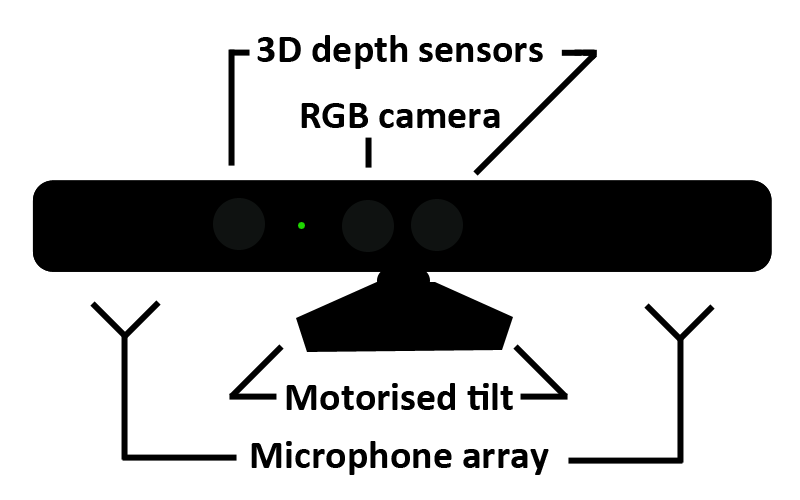

The Kinect’s set-up

Kinect is built with a four-microphone array. It’s not like the microphone on your laptop, though. The array lets you cancel out background sound and pinpoint the source of a sound within the Kinect’s environment. All of that is processed on-board, so you don’t really have to worry about it. To see it in action, run the ‘KinectAudioDemo’ application in the SDK’s Samples folder.

The wonderful part of the SDK is that it gives you speech recognition for free. Speech grammars (essentially the commands your project can accept) have, for the most part, already been built for you within the SDK.
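To make “the commands your project can accept” a bit more concrete, here is a minimal sketch of a phrase-to-command table in plain C#. It is not the SDK’s grammar API and it is not Moto’s real keyword list: the `Verbs` values, the `SpeechKeywords` class and every phrase in it are made up for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical verb list; Moto's real SpeechRecognizer.Verbs enum may differ.
public enum Verbs { None, StartListening, StopListening, YesSaid, NoSaid, GoHome }

public static class SpeechKeywords
{
    // Illustrative phrases only; these are assumptions, not Moto's keywords.
    private static readonly Dictionary<string, Verbs> Phrases =
        new Dictionary<string, Verbs>(StringComparer.OrdinalIgnoreCase)
        {
            { "moto listen", Verbs.StartListening },
            { "moto stop", Verbs.StopListening },
            { "yes", Verbs.YesSaid },
            { "no", Verbs.NoSaid },
            { "go home", Verbs.GoHome },
        };

    // Returns the verb for a recognised phrase, or Verbs.None if it is not a keyword.
    public static Verbs Lookup(string phrase)
    {
        if (phrase == null)
        {
            return Verbs.None;
        }

        Verbs verb;
        return Phrases.TryGetValue(phrase.Trim(), out verb) ? verb : Verbs.None;
    }
}
```

Calling `SpeechKeywords.Lookup("Moto Listen")` would give `Verbs.StartListening`, while anything that isn’t in the table falls through to `Verbs.None`. A real grammar does the matching on audio rather than on strings, but the idea of a fixed list of phrases, each tied to one action, is the same.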

This post isn’t going to detail how to add speech recognition to your apps (there’s a great guide on KinectForWindows.org), but I am going to show you how it’s implemented in my project, Moto.

Moto implementation

Moto listens out for specific keywords defined in my speech grammar. There are keywords to start and stop listening, as well as a bunch of other functions.

```csharp
private void recognizer_SaidSomething(object sender, SpeechRecognizer.SaidSomethingEventArgs e)
{
    if (voiceNavEnabled) // If we are allowing speech commands at this time...
    {
        // An ordinary command (not start/stop listening, not yes/no) was heard while listening
        if (isListening
            && e.Verb != SpeechRecognizer.Verbs.StartListening
            && e.Verb != SpeechRecognizer.Verbs.StopListening
            && e.Verb != SpeechRecognizer.Verbs.YesSaid
            && e.Verb != SpeechRecognizer.Verbs.NoSaid)
        {
            // Display confirmation dialog
            confirmChoice(e);
        }

        if (e.Verb == SpeechRecognizer.Verbs.StartListening)
        {
            // Start listening
            voiceNavListening(true);
        }
        else if (e.Verb == SpeechRecognizer.Verbs.StopListening)
        {
            // Stop listening
            voiceNavListening(false);
        }
    }

    // [...]
}
```

When the recognizer reports that something was said, the handler checks whether it matches one of the words I’ve assigned to actions. If it does, Moto is currently listening, and the word isn’t one of the start or stop listening keywords or one of the confirmation keywords (yes and no), then Moto shows a confirmation window for that command.

This is what a confirmation window looks like at the moment. It’s obviously still in development, but it does the job.

If what I said was the keyword for start or stop listening, the handler sets the isListening boolean instead. isListening controls whether Moto is… well… listening or not, and voiceNavListening() sets it to the value passed in. voiceNavListening() can also take a second parameter that sets voiceNavEnabled, which is a kill-switch for voice navigation in places where you don’t want it happening.
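For anyone who wants to see how those two flags could fit together, here is a rough sketch. The names mirror the ones in this post, but the class itself (`VoiceNavState`), the optional-parameter signature and the `ShouldConfirm` helper are my assumptions for illustration, not Moto’s actual code. In particular, forcing isListening to false whenever voice navigation is disabled is a design choice of the sketch.

```csharp
using System;

// Hypothetical stand-in for the state Moto keeps. The method bodies are
// guesses for illustration, not the project's real implementation.
public class VoiceNavState
{
    public bool IsListening { get; private set; }
    public bool VoiceNavEnabled { get; private set; } = true;

    // Sets the listening flag; the optional second argument sets the kill-switch.
    public void VoiceNavListening(bool listening, bool? enabled = null)
    {
        if (enabled.HasValue)
        {
            VoiceNavEnabled = enabled.Value;
        }

        // Assumption: never report "listening" while voice navigation is disabled.
        IsListening = VoiceNavEnabled && listening;
    }

    // True when an ordinary command should be sent to the confirmation dialog.
    // Control keywords are start/stop listening and yes/no.
    public bool ShouldConfirm(bool isControlKeyword)
    {
        return VoiceNavEnabled && IsListening && !isControlKeyword;
    }
}
```

With this shape, `VoiceNavListening(true)` starts listening, `VoiceNavListening(false)` stops, and `VoiceNavListening(false, false)` switches voice navigation off entirely until something passes `true` as the second argument again.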

That’s essentially Moto’s current implementation of speech recognition. It’s not perfect, and I’m not a C# expert, but it’s getting there. I’ll let you know of further developments.