I’ve just come back from Future Decoded - the Microsoft-hosted event keen to show you what’s up next in the tech world. Weirdly, it all seems to run on Azure. That’s funny…

I visited Future Decoded last year and very much enjoyed it. For a free event it was top quality, so long as you looked past a lot of the cloud service plugs. While last year was heavily focused on the potential for machine learning in industry, this year was more about the opportunities it presents in everyday life.

Opening Keynote

Similar to last year, the opening talks were an amalgamation of great thinkers and doers showing how once space-age technologies like AI and machine learning now have real-world applications.

Joseph Sirosh kicked things off with an overview of the problems tech like this is solving right now. From LASIK success rates to remote medical monitoring of infants, technology allows us to make informed, important decisions instantly with the power of the cloud. With more devices connected together than ever, the data they collect can be used in new and even more exciting ways as time goes on.

There was one feature I hadn’t seen before that I was very surprised by. We’re talking eye-opening, jaw-dropping, in-the-future kind of thinking. Microsoft’s Cognitive Services, specifically their Video API, is top notch. It can detect faces, find out who they are (assuming they’re someone notable, of course), and guess their age and emotion across the video. All you provide is a video file or even a YouTube link. It’s crazy.

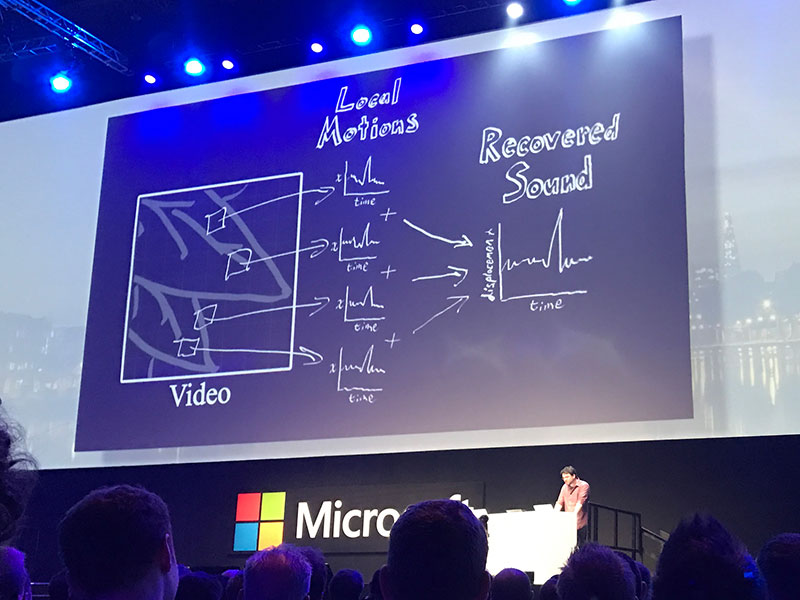

On a completely different tack, my favourite talk was given by Abe Davis from Stanford, who detailed how to capture information from vibrations. By filming the small movements in the leaves of plants you can recover the sounds in the room. A thoroughly interesting talk - if a little creepy. It ran along the lines of his TED Talk should you want to take a look.

Machine Learning on the Web

There wasn’t as much web content this year as last year, which is a shame. The only web talk that caught my eye (mainly because some web service wasn’t crowbarred into the title) was Chris Heilmann’s, on making machine learning more approachable and useful for the masses on the web.

It seems machine learning has come across to the public as chatbots in many guises, with varying success. The aim of the talk was to show where else we’re using it at the moment, in more approachable and useful places. For example, Facebook uses it to provide alt text for uploaded images. Detecting the subjects in photos and making those photos more accessible for non-sighted users is just the sort of application machine learning should be striving to achieve.

Developing for HoloLens

Unfortunately I missed out on the chance to play around with the HoloLens - Microsoft’s spiritual successor to the Kinect in my eyes. While it’s very cool, I imagined it would be a nightmare to develop anything for. So I popped along to what was apparently the first ever developer session for HoloLens, presented by Pete Daukintis.

Turns out there’s plenty of help available. There’s even a HoloLens emulator, which can take pre-defined rooms scanned with either HoloLens or Kinect to test with. By using the provided kits you can either port UWP apps to the platform or build new programs in the likes of Visual Studio, or Unity if you’re thinking in 3D.

All in all, it was another good show from Microsoft. Last year I came not knowing what to expect and came back with my eyes opened. This time, I came away with some good ideas to work on, even if there was nothing along the lines of last year’s quantum computing.

Oh, and now I want a Surface Pro 4. For reasons.